Full Demonstration

- yingnan4

- October 18, 2022

- 1 min read

Updated: October 19, 2022

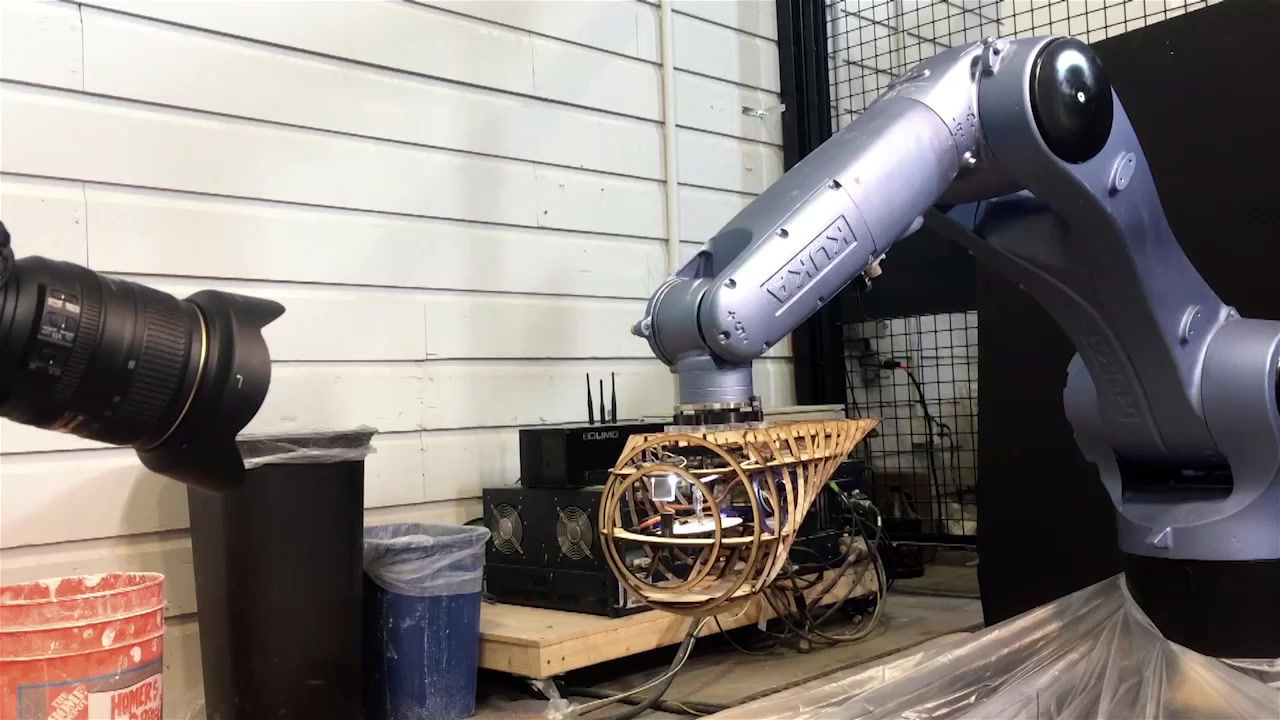

The full demonstration runs on a distributed computing topology: the robot, with its Raspberry Pi, is the master that collects all data and runs the computation, while the PC serves as a monitor and issues commands.

On the Pi:

Under the package '000_combined_demo', use the two launch files '000_SLAM.launch' and '001_fiducial.launch' to start the lidar and depth camera, as well as the navigation stack and the visual driver node.

On the PC:

Under the package 'formal01_rplidar_hector', use the launch file '000_Rviz_SLAM.launch' to start Rviz locally and observe the data.
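As a rough sketch, the launch steps on both machines can be written out as command lines. This assumes a ROS 1 setup where launch files are started with the standard `roslaunch <package> <launch file>` form; the post does not show the exact commands, so the helper `roslaunch_command` and the two lists below are only illustrative. Since `roslaunch` stays in the foreground, each printed command would normally go in its own terminal.

```python
import shlex

# (package, launch file) pairs taken from the text above.
PI_LAUNCHES = [
    ("000_combined_demo", "000_SLAM.launch"),
    ("000_combined_demo", "001_fiducial.launch"),
]
PC_LAUNCHES = [
    ("formal01_rplidar_hector", "000_Rviz_SLAM.launch"),
]


def roslaunch_command(package, launch_file):
    """Return the argument list for `roslaunch <package> <launch_file>`.

    Hypothetical helper: it only builds the command, it does not run it.
    """
    return ["roslaunch", package, launch_file]


# Print one command line per launch file, labelled with the machine it runs on.
for host, launches in (("Pi", PI_LAUNCHES), ("PC", PC_LAUNCHES)):
    for package, launch_file in launches:
        print(f"[{host}] {shlex.join(roslaunch_command(package, launch_file))}")
```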

SSH to the Raspberry Pi:

ssh dofbot@192.168.1.78

Procedure:

The SLAM node and fiducial can be left running.

Call the fiducial driver node to locate the material and the installation SEPARATELY: the wall module and the target are located in 2 separate runs. Run the node, then kill it once it is done.

After both are located, use the grabbing and installing Python commands for the arm.

/bin/python3 /home/dofbot/000_robot_ws/src/000_combined_demo/scripts/01_grabbing.py

/bin/python3 /home/dofbot/000_robot_ws/src/000_combined_demo/scripts/02_installing.py
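If the two arm commands are to be chained, a minimal sketch could run them in order and stop when the first one fails. This assumes the scripts exit with a nonzero status on failure, which the post does not confirm; `arm_commands` and `run_in_order` are hypothetical helper names, not part of the package.

```python
import subprocess

# Interpreter and script paths are copied from the commands above.
PYTHON = "/bin/python3"
SCRIPTS_DIR = "/home/dofbot/000_robot_ws/src/000_combined_demo/scripts"
ARM_STEPS = ["01_grabbing.py", "02_installing.py"]


def arm_commands():
    """Build the argument list for each arm script, in execution order."""
    return [[PYTHON, f"{SCRIPTS_DIR}/{name}"] for name in ARM_STEPS]


def run_in_order(commands, runner=subprocess.run):
    """Run commands one by one; return the first command that fails, or None.

    Assumption: a nonzero return code means the step failed.
    """
    for command in commands:
        completed = runner(command)
        if completed.returncode != 0:
            return command  # stop here so installing never runs after a failed grab
    return None
```

On the Pi, `failed = run_in_order(arm_commands())` would run grabbing first and only proceed to installing if grabbing exited cleanly. The `runner` parameter exists so the sequence can be dry-run without moving the arm.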
